Acquire and release fences, in my opinion, are rather misunderstood on the web right now. That’s too bad, because the C++11 Standards Committee did a great job specifying the meaning of these memory fences. They enable robust algorithms which scale well across multiple cores, and map nicely onto today’s most common CPU architectures.

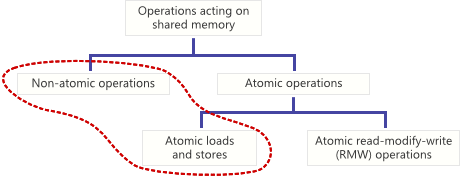

First things first: Acquire and release fences are considered low-level lock-free operations. If you stick with higher-level, sequentially consistent atomic types, such as volatile variables in Java 5+, or default atomics in C++11, you don’t need acquire and release fences. The tradeoff is that sequentially consistent types are slightly less scalable or performant for some algorithms.
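To make the higher-level option concrete, here is a minimal sketch of one thread handing a value to another using only a default (sequentially consistent) C++11 atomic, with no standalone fences anywhere. The names `g_value`, `g_published`, `writer`, `reader` and `run_seq_cst` are hypothetical, chosen just for this illustration.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

// Hypothetical names, for illustration only.
int g_value = 0;                        // plain, non-atomic data
std::atomic<bool> g_published{false};   // default atomic: sequentially consistent

void writer(int v) {
    g_value = v;          // ordinary write
    g_published = true;   // seq_cst store; publishes the write above
}

int reader() {
    while (!g_published) {            // seq_cst load
        std::this_thread::yield();    // wait until the writer has published
    }
    return g_value;                   // guaranteed to see the writer's value
}

// Runs one writer and one reader, and returns what the reader observed.
int run_seq_cst(int v) {
    g_value = 0;
    g_published = false;
    int seen = -1;
    std::thread r([&] { seen = reader(); });
    std::thread w([&] { writer(v); });
    w.join();
    r.join();
    return seen;
}
```

Calling `run_seq_cst(7)` should return `7`. Nothing here needs an explicit fence, because the default ordering on `g_published` already provides the required guarantees.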

On the other hand, if you’ve developed for multicore devices in the days before C++11, you might feel an affinity for acquire and release fences. Perhaps, like me, you remember struggling with the placement of some lwsync intrinsics while synchronizing threads on Xbox 360. What’s cool is that once you understand acquire and release fences, you actually see what we were trying to accomplish using those platform-specific fences all along.

Acquire and release fences, as you might imagine, are standalone memory fences, which means that they aren’t coupled with any particular memory operation. So, how do they work?

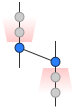

An acquire fence prevents the memory reordering of any read which precedes it in program order with any read or write which follows it in program order.

A release fence prevents the memory reordering of any read or write which precedes it in program order with any write which follows it in program order.
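As a rough sketch of how those two rules work together, the example below passes a plain `int` between threads using `std::atomic_thread_fence` and a guard flag accessed with relaxed ordering. The names `g_payload`, `g_ready`, `publish`, `consume` and `run_once` are hypothetical, and this is just one way to arrange the fences, assuming a single producer and a single consumer.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

// Hypothetical names, for illustration only.
int g_payload = 0;                   // plain, non-atomic data
std::atomic<bool> g_ready{false};    // guard flag, only accessed with relaxed ordering

void publish(int value) {
    g_payload = value;                                      // write that precedes the fence
    std::atomic_thread_fence(std::memory_order_release);    // the write above can't be reordered past the store below
    g_ready.store(true, std::memory_order_relaxed);         // write that follows the fence
}

int consume() {
    while (!g_ready.load(std::memory_order_relaxed)) {      // read that precedes the fence
        std::this_thread::yield();
    }
    std::atomic_thread_fence(std::memory_order_acquire);    // the read below can't be reordered before the load above
    return g_payload;                                       // read that follows the fence
}

// Runs one producer and one consumer, and returns what the consumer observed.
int run_once(int value) {
    g_payload = 0;
    g_ready.store(false, std::memory_order_relaxed);
    int seen = -1;
    std::thread consumer([&] { seen = consume(); });
    std::thread producer([&] { publish(value); });
    producer.join();
    consumer.join();
    return seen;
}
```

Calling `run_once(42)` should return `42`. Note that neither fence is attached to a particular memory operation: the release fence sits between the payload write and the flag store, and the acquire fence sits between the flag load and the payload read.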

Preshing on Programming

In an earlier post, I explained how