When optimizing code for multiple CPU cores, sometimes you need to write a new synchronization primitive. I don’t mean to encourage it, but it does happen. And if you’re going to do it, you might as well start by looking at a few examples. This won’t save you from shooting yourself in the foot, but it may help reduce the number of times, so you can walk away with a few toes remaining.

In my previous post, I showed how to implement a synchronization primitive known as the Benaphore in C++ on Win32. The Benaphore is not lock-free (being a lock itself), but it does serve as a simple yet instructive example of writing a synchronization primitive in user space. It also offers very low overhead when there’s no lock contention.

One limitation of the implementation I showed was that it was non-recursive. This means that if the same thread attempts to obtain the same lock twice, it will deadlock. In this post, I’ll show how to extend the implementation to support recursive locking.

One limitation of the implementation I showed was that it was non-recursive. This means that if the same thread attempts to obtain the same lock twice, it will deadlock. In this post, I’ll show how to extend the implementation to support recursive locking.

Recursive locking is useful when you have a module which calls itself through its own public interface. For example, in a memory manager, you might encounter the following code:

void* MemoryManager::Realloc(void* ptr, size_t size) { AUTO_LOCK_MACRO(m_lock); if (ptr == NULL) { return Alloc(size); } else if (size == 0) { Free(size); return NULL; } else ... } void* MemoryManager::Alloc(size_t size) { AUTO_LOCK_MACRO(m_lock); ... }

AUTO_LOCK_MACRO is, of course, one of those funky C++ macros which obtains the lock and automatically unlocks it when we exit the function scope.

As you can see, if we pass NULL to Realloc, the lock will be obtained once by the Realloc function, and a second time (recursively) when Alloc is called. Obviously, it would be very easy to modify this particular example to avoid the recursive lock, but in large, multithreaded projects, you’re likely to find other examples.

We can extend our Win32 implementation of the Benaphore to support recursive locking as follows. I’ve added two new members to the class: m_owner, which stores the thread ID (TID) of the current owner, and m_recursion, which stores the recursion count.

Expert readers will note that this code does not use the new C++11 atomic library standard. As such, it’s destined to go out of style in the long run. However, this is the style we’ve been using in the game industry since the mid-2000’s. It will compile using any Microsoft compiler, and all Windows-specific calls have equivalents on other platforms.

// Define this to {} in a retail build: #define LIGHT_ASSERT(x) { if (!(x)) DebugBreak(); } class RecursiveBenaphore { private: LONG m_counter; DWORD m_owner; DWORD m_recursion; HANDLE m_semaphore; public: RecursiveBenaphore::RecursiveBenaphore() { m_counter = 0; m_owner = 0; // an invalid thread ID m_recursion = 0; m_semaphore = CreateSemaphore(NULL, 0, 1, NULL); } RecursiveBenaphore::~RecursiveBenaphore() { CloseHandle(m_semaphore); } void Lock() { DWORD tid = GetCurrentThreadId(); if (_InterlockedIncrement(&m_counter) > 1) // x86/64 guarantees acquire semantics { if (tid != m_owner) WaitForSingleObject(m_semaphore, INFINITE); } //--- We are now inside the Lock --- m_owner = tid; m_recursion++; } void Unlock() { DWORD tid = GetCurrentThreadId(); LIGHT_ASSERT(tid == m_owner); DWORD recur = --m_recursion; if (recur == 0) m_owner = 0; DWORD result = _InterlockedDecrement(&m_counter); // x86/64 guarantees release semantics if (result > 0) { if (recur == 0) ReleaseSemaphore(m_semaphore, 1, NULL); } //--- We are now outside the Lock --- } };

As in the original Benaphore, the first thread to call Lock will take ownership without making any expensive kernel calls. It also performs some bookkeeping: It sets m_owner to its own TID, and m_recursion becomes 1. If the same thread calls Lock again, it will increment both m_counter and m_recursion.

Correspondingly, when the same thread calls Unlock, it will decrement both m_counter and m_recursion, but it will only call ReleaseSemaphore once m_recursion is decremented back down to 0. If m_recursion remains greater than 0, it means that the current thread is still holding the lock in an outer scope, so it’s not yet safe to relinquish ownership to other threads.

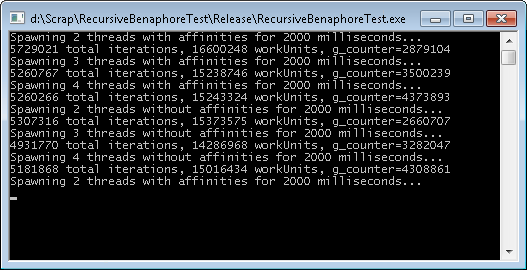

Now, if you scour the Internet, you’ll find it’s full of broken lock-free code and synchronization primitives. So why should you believe the code here is any different? For one thing, it’s been stress tested. In my opinion, that’s the most valuable thing. I’ve written a small test application which spawns various numbers of threads, each hammering on this lock at random times and with random recursion depths. It updates some shared data within each lock and performs various consistency checks. You can download the source code here.

And for good measure, here’s a TryLock method.

bool TryLock() { DWORD tid = GetCurrentThreadId(); if (m_owner == tid) { // Already inside the lock _InterlockedIncrement(&m_counter); } else { LONG result = _InterlockedCompareExchange(&m_counter, 1, 0); if (result != 0) return false; //--- We are now inside the Lock --- m_owner = tid; } m_recursion++; return true; }

For those interested in the fine details, here are a few in particular:

-

In

RecursiveBenaphore::Unlock, it’s important to setm_ownerback to 0 before calling_InterlockedDecrement. Otherwise, data corruption is possible. For example, suppose there are two threads with TIDs 123 and 456. Thread 123 has just completed a call toUnlock, leavingm_ownerset to 123. The following could happen next:- Both threads simultaneously enter

RecursiveBenaphore::Lock. - Thread 456 performs

_InterlockedIncrement, gets 1 as the result, and therefore skips theWaitForSingleObject. - Thread 123 performs

_InterlockedIncrementand gets 2 as the result. - Thread 123 checks and sees that

id == m_owner, because thread 456 hasn’t changed it yet. Therefore, it also skips overWaitForSingleObject.

Shortly thereafter, both threads will return fromLock, each believing it owns the lock. The data protected by the lock will likely become corrupted. Indeed, if you download the test application and delete this part ofRecursiveBenaphore::Unlock, it will fail pretty quickly. - Both threads simultaneously enter

-

Also in

RecursiveBenaphore::Unlock, the value ofm_recursionis copied to a local variable exactly once, and used locally from that point on. We would not, for example, want to re-read the value ofm_recursionagain after_InterlockedDecrement. At that point, another thread could have changed it. -

You may notice that

m_recursionis modified without using any atomic operations. That’s because between the call to_InterlockedIncrementinLockand_InterlockedDecrementinUnlock, the thread owning the lock has exclusive access to bothm_ownerandm_recursion, with all the necessary acquire and release semantics. Using atomics onm_recursionwould be unnecessary and wasteful.

How is the last point guaranteed? It’s guaranteed by the semaphore in the slow case, and the atomic instructions in the uncontended case. On x86 and x64, the _InterlockedIncrement call generates a lock xadd instruction, which acts as a full memory barrier, guaranteeing both acquire and release semantics. This property is unique to x86/64. If you port this code to a dual-core iOS device, like the iPad 2, it wouldn’t be enough to call OSAtomicIncrement32 in place of _InterlockedIncrement. You’d have to call OSAtomicIncrement32Barrier to have similar guarantees. Even on Xbox 360, which shares the Win32 API but runs on PowerPC, the correct function to call is actually InterlockedIncrementAcquire.

I may have lost a few readers by this paragraph. Hopefully, it’s an exciting kind of lost. I’ll talk more about memory access semantics in a future post.

For those who haven’t delved into writing synchronization primitives, perhaps the RecursiveBenaphore has offered a glimpse into how delicate such code can be. Every small detail is there for a reason, ordering is critical and hidden guarantees are at play.

Preshing on Programming

Preshing on Programming