When you create a 32-bit application in Visual C++ 2008, the Release configuration comes with exception handling, buffer security checks, and _SECURE_SCL enabled by default. In the next few posts, I’ll illustrate the cost of each of these features, using wordcount.exe from Hash Table Performance Tests as a test application.

The cost of exception handling is not exactly news. Several years ago, at a training session for console game developers, we were advised to disable it in our engines. Basically, we were told that by enabling this feature, and not even using it, we were adding overhead across the entire executable, and it was a big waste of performance at runtime. Of course, wordcount.exe is just a tiny Win32 application, and not a big console game – but it’s still interesting to take a look at a few benchmarks, and get some idea what they were talking about.

How the Compiler Supports Exception Handling

The reader should already be familiar with exception handling in C++. One important detail, which requires compiler support, is stack unwinding. Any time a C++ exception is thrown (and caught), the system must be prepared to call the destructors of any intermediate C++ variables located on the stack. But how does the system know which destructors to call? The answer is platform- and compiler-specific.
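To make the unwinding requirement concrete, here is a minimal sketch in portable C++. The names Tracer, inner, middle and runUnwindDemo are made up for illustration and are not part of wordcount.exe. The point is that middle neither throws nor catches, yet its local object must still be destroyed when the exception passes through it.

```cpp
#include <stdexcept>
#include <string>
#include <vector>

// Illustrative log of the order in which destructors and the handler run.
static std::vector<std::string> g_log;

// Hypothetical helper type: records its own destruction in g_log.
struct Tracer {
    std::string name;
    explicit Tracer(const std::string &n) : name(n) {}
    ~Tracer() { g_log.push_back("~" + name); }
};

void inner() {
    Tracer t("inner");
    throw std::runtime_error("boom");  // starts the stack unwind
}

void middle() {
    Tracer t("middle");  // no throw or catch here, but t must still be destroyed
    inner();
}

// Runs one throw/catch round trip and returns the resulting log.
std::vector<std::string> runUnwindDemo() {
    g_log.clear();
    try {
        middle();
    } catch (const std::runtime_error &) {
        g_log.push_back("caught");
    }
    return g_log;
}
```

Calling runUnwindDemo() yields the entries "~inner", "~middle", "caught", in that order: both intermediate objects are destroyed before the handler body runs.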

In 32-bit Visual C++, each thread maintains a linked list of exception-handling records in the Thread Information Block – a data structure located at the address specified by segment register FS. The linked list of records starts at FS:[0], and is constantly updated throughout the thread’s lifetime. You can find most of the gory details in this lengthy 1997 article by Matt Pietrek, though I suspect some of the compiler-specific details may have changed since then.
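The bookkeeping described above can be imitated in ordinary C++. This is only a simulation under stated assumptions: HandlerRecord, g_listHead and FrameGuard are hypothetical names, a global pointer stands in for FS:[0], and the real record layout is compiler-specific and not reproduced here.

```cpp
#include <cstddef>

// Hypothetical stand-in for one exception-handling record. Only the
// "link to the previous record" idea is kept from the real thing.
struct HandlerRecord {
    HandlerRecord *prev;    // record registered by the nearest enclosing frame
    const char *frameName;  // placeholder for the real handler pointer
};

// Stand-in for the list head that 32-bit Windows keeps at FS:[0].
// A real thread has its own head; this sketch is single-threaded.
static HandlerRecord *g_listHead = nullptr;

// Mimics the extra prologue/epilogue work: link a record on entry,
// unlink it on exit.
class FrameGuard {
public:
    explicit FrameGuard(const char *frameName) {
        m_record.prev = g_listHead;
        m_record.frameName = frameName;
        g_listHead = &m_record;
    }
    ~FrameGuard() { g_listHead = m_record.prev; }

    FrameGuard(const FrameGuard &) = delete;
    FrameGuard &operator=(const FrameGuard &) = delete;

private:
    HandlerRecord m_record;
};

// Walks the list from the head, as an unwinder would, and counts the records.
std::size_t countRecords() {
    std::size_t n = 0;
    for (const HandlerRecord *r = g_listHead; r != nullptr; r = r->prev) ++n;
    return n;
}

std::size_t innerFrame() {
    FrameGuard guard("innerFrame");
    return countRecords();
}

std::size_t outerFrame() {
    FrameGuard guard("outerFrame");
    return innerFrame();
}
```

Here outerFrame() returns 2, because both frames are linked in while innerFrame is running, and the list is empty again once outerFrame returns. The link and unlink steps are the part that costs instructions on every call, whether or not anything is ever thrown.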

In short, any time the compiler thinks a C++ exception might occur inside a function – or any function it calls – it outputs a few extra instructions which add a record to this linked list. Take the following code snippet:

    class StdExtHashMapTester
    {
        stdext::hash_map<std::string, int> m_wordCount;

        void readWords(WordReader &reader)
        {
            while (const char *word = reader.getWord())
            {
                m_wordCount[word] += 1;
            }
        }
    };

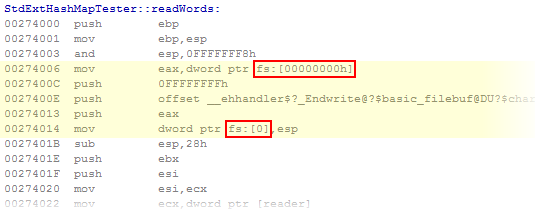

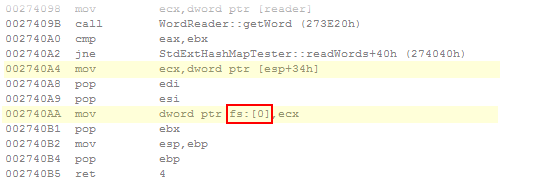

The readWords function does not throw or catch any exceptions. But it does implicitly create temporary C++ objects: the expression m_wordCount[word] constructs a temporary std::string from word in order to call operator[]. The compiler must therefore emit a few extra instructions in case a stack unwind occurs before this function returns. Those extra instructions are highlighted in the disassembly listing. You can always identify them by the fact that they manipulate FS:[0].

Similarly, before the function returns, there are a couple of instructions to remove that record from the linked list.

There are even more instructions in the middle of the function. In total, 10 out of the 52 instructions generated for this function are pure overhead for exception handling support. If you don’t actually use C++ exception handling in your application, those instructions are basically executed for nothing!

Of course, you can turn off exception handling in the project Properties under C/C++ -> Code Generation. You’ll also want to #define _HAS_EXCEPTIONS 0 before including any Standard C++ Library headers, to avoid warning C4530.
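As a rough sketch of the source-side half of that change, the define has to appear before the first Standard C++ Library header in every translation unit (or be set project-wide as a preprocessor definition). The countWord function below is a made-up example, not code from wordcount.exe, and on compilers other than Visual C++ the macro is most likely just ignored.

```cpp
// Must come before any Standard C++ Library header is included.
#define _HAS_EXCEPTIONS 0

#include <map>
#include <string>

// Hypothetical helper: bumps the count for one word and returns the new count.
int countWord(std::map<std::string, int> &counts, const char *word) {
    return counts[word] += 1;
}
```

Putting the define in a header that every source file includes first, or in the project's preprocessor definitions, avoids the risk of one file including a library header before the macro is seen.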

Performance Measurements

Now, to measure the impact of this setting on performance. First, I built a “finely tuned” Release version of wordcount.exe to use as a baseline:

- Disabled exception handling, buffer security checks, and _SECURE_SCL.

- Static C/C++ Runtime Library (non-DLL).

- Used DLMalloc for memory management, instead of the default CRT heap. It’s faster.

- Used the default Release settings of Inline “Any Suitable” (/Ob2) and Whole Program Optimization (/GL).

- Disabled Address Space Layout Randomization, as this seemed to change the results on each run.

- Ran outside the debugger in a stripped-down environment, with minimum background services running.

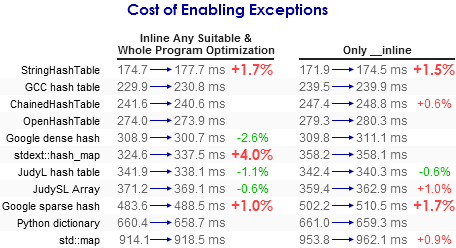

After that, I enabled exception handling, expecting a measurable slowdown. That produced the data in the first and second columns.

Finally, I re-ran the entire experiment in a simpler configuration: I disabled Whole Program Optimization, and set Inline Function Expansion to “Only __inline” (/Ob1). This produced the data in columns three and four.

As you can see, a price was paid for enabling exceptions, though not quite as much as I expected. The biggest impact was on stdext::hash_map when Whole Program Optimization was enabled: 4% slower. The other tests in this suite slowed down by a smaller amount, and counter-intuitively, some even became faster!
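For reference, a percentage like this is presumably just the relative change in running time against the baseline build. A minimal sketch of that arithmetic, with percentSlower as a made-up helper name:

```cpp
// Percentage by which the feature-enabled build is slower than the baseline.
// Positive means slower, negative means faster.
double percentSlower(double baselineSeconds, double withFeatureSeconds) {
    return (withFeatureSeconds - baselineSeconds) / baselineSeconds * 100.0;
}
```

With purely made-up timings of 2.0 and 2.5 seconds, percentSlower(2.0, 2.5) gives 25. A test that got faster with exceptions enabled comes out negative.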

I poked around and found that in many functions, the compiler was smart enough to determine that no exceptions could possibly be thrown by any of the functions being called, and so it omitted the extra instructions. It was even better at this when Whole Program Optimization was enabled. Therefore, I wonder if the impact would be more noticeable in a real application with more complexity, such as a game engine.

Why did some tests actually become faster? I wondered about that too. It seems that “Inline Any Suitable” and “Whole Program Optimization” are somewhat unpredictable. Perhaps these settings make different decisions sometimes, offsetting the cost of enabling exceptions. That might explain the results for Google dense hash, but I didn’t look closer.

What about when Whole Program Optimization was disabled? I took a closer look at the small 0.6% improvement for JudyL hash table. In this test, the compiler actually seemed to generate identical code, both with and without exceptions enabled. The only differences were the function addresses. Yet the 0.6% was consistently reproducible. Therefore, I wonder if it had something to do with instruction cache utilization on the CPU. This would make sense, as the test consists entirely of a small set of code executed hundreds of thousands of times – any subtle caching difference would be magnified.

Preshing on Programming
